Abstract

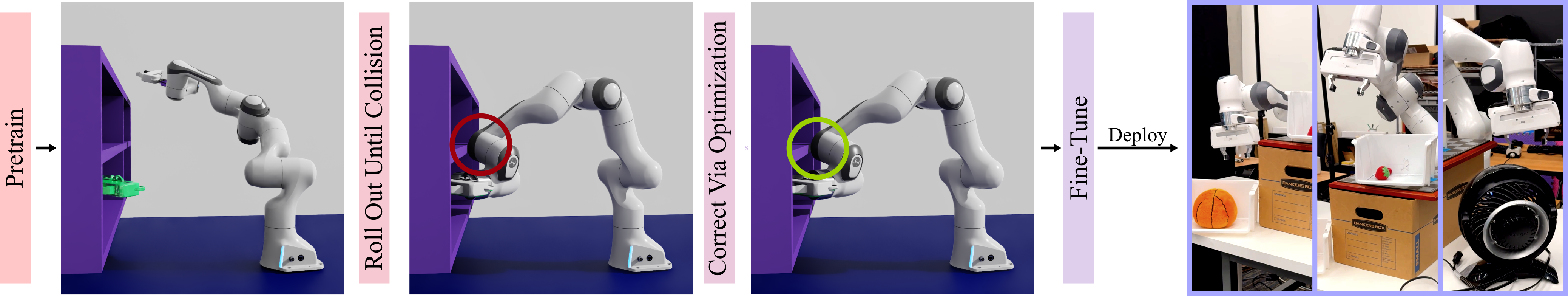

The world is full of clutter. To operate effectively in uncontrolled, real-world spaces, robots must navigate safely, executing tasks around obstacles while in close proximity to hazards. Generating safe motion for robotic manipulators remains a long-standing challenge in robotics, particularly in partially observed environments, where classical techniques often fail. Learned end-to-end motion policies can infer correct solutions in these settings, but they cannot yet reliably produce safe movement close to obstacles. In this work, we introduce Avoid Everything, a novel end-to-end system for generating collision-free motion toward a target, even when the target is close to obstacles. Avoid Everything consists of two parts: 1) Motion Policy Transformer (MπFormer), a transformer architecture for end-to-end joint-space control from point clouds, trained on over 1,000,000 expert trajectories; and 2) a fine-tuning procedure we call Refining on Optimized Policy Experts (ROPE), which uses optimization to provide demonstrations of safe behavior in challenging states. With these techniques, we successfully solve over 63% of the reaching problems on which the previous state-of-the-art method fails, resulting in an overall success rate of over 91% in challenging manipulation settings.

Acknowledgements

This research was made possible by the generosity, help, and support of many. Among them, we would like to thank the following people for their contributions. Thank you Chris Xie for sharing your knowledge of transformers generally, as well as how to apply them effectively to 3D vision. Thank you Mitchell Wortsman for your help in tuning our optimizer to produce useful loss curves. Thank you Jiafei Duan and Neel Jawale for your help with the real-robot infrastructure and experiments. Thank you Daniel Gordon for sharing your wealth of deep learning expertise. Thank you Zak Kingston for your help improving and optimizing our expert pipeline. Thank you Rosario Scalise and Matthew Schmittle for helping to brainstorm our planner design. Thank you Adithyavairavan Murali for sharing your expertise on hard negative mining. Finally, thank you Jennifer Mayer for your help in organizing the paper into a cohesive story.